Another AI model has joined the sea of AI models, and this one comes from OpenAI yet again. Earlier this year, OpenAI released GPT-4o, which was meant to be more efficient than GPT-4. It was still quite expensive, though, and could rack up a sizable bill, especially for developers whose apps call the model through the API repeatedly throughout the day.

As a result, developers were turning to cheaper small AI models from competitors, like Gemini 1.5 Flash or Claude 3 Haiku.

Now, OpenAI is releasing GPT-4o mini, its most cost-efficient model yet, and with it the company is entering the small AI model space. GPT-4o mini does not achieve its low cost by cutting back on intelligence; it is smarter than OpenAI’s existing GPT-3.5 Turbo model.

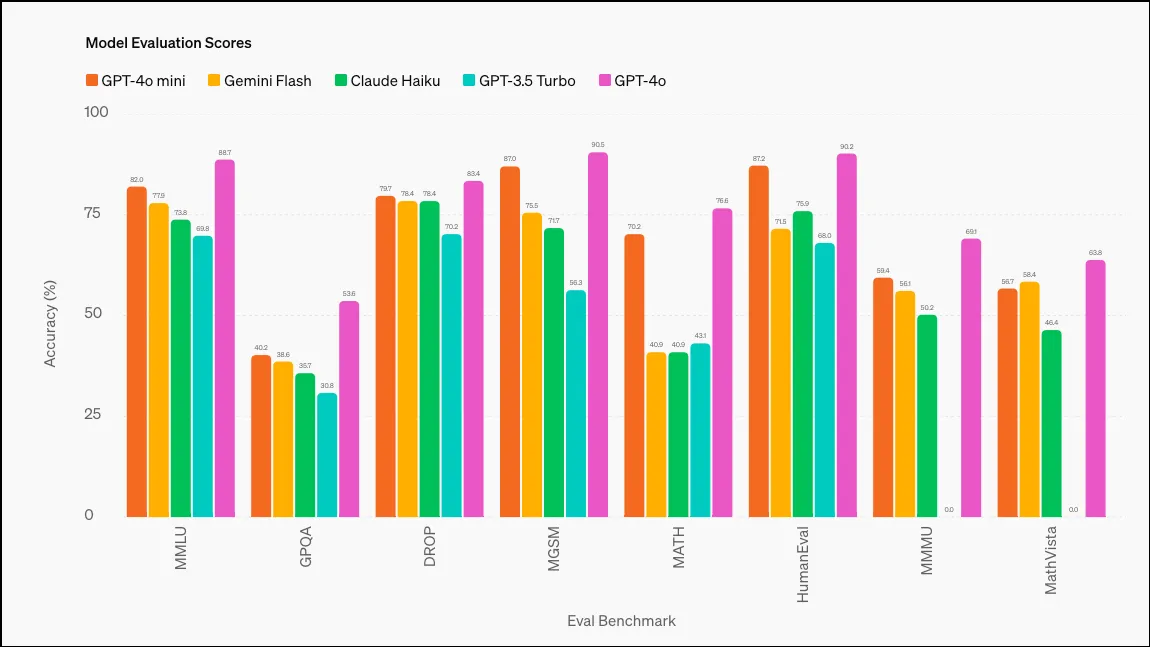

According to OpenAI, GPT-4o mini scored 82% on MMLU (Measuring Massive Multitask Language Understanding), outperforming GPT-3.5 Turbo (70%), Claude 3 Haiku (75.2%), and Gemini 1.5 Flash (78.9%). Among larger models, which are not in the small AI model category, GPT-4o scored 88.7% on this benchmark and Gemini Ultra holds the highest score at 90%.

GPT-4o mini is being rolled out to ChatGPT Free, Team and Plus users as well as developers today. For ChatGPT users, it has essentially replaced GPT-3.5; GPT-4o mini will be the model the conversation defaults to once you run out of free GPT-4o queries. Developers will still have the option to use GPT-3.5 through the API, but it will eventually be dropped. ChatGPT Enterprise users will get access to GPT-4o mini next week.

As mentioned above, the focus of GPT-4o mini is to give developers a low-cost, low-latency model for their apps that is also capable. Compared to other small models, GPT-4o mini excels at textual and multimodal (vision) reasoning, mathematical reasoning, and coding tasks.

It currently supports text and vision in the API; support for text, image, video, and audio inputs and outputs is on the roadmap.
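As a rough illustration of what a combined text-and-vision request might look like, the sketch below only builds a request body in the message format used by OpenAI's Chat Completions API; it does not send anything. The helper name `build_vision_request`, the prompt, and the image URL are hypothetical placeholders, so check the official API reference before relying on the exact field names.

```python
import json


def build_vision_request(prompt: str, image_url: str, model: str = "gpt-4o-mini") -> dict:
    """Build a request body with one text part and one image part.

    Hypothetical helper for illustration; it performs no network call.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # Text part of the user message
                    {"type": "text", "text": prompt},
                    # Image part, referenced by URL (placeholder URL in the demo below)
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    payload = build_vision_request(
        "What is in this picture?", "https://example.com/cat.png"
    )
    print(json.dumps(payload, indent=2))
```

Keeping the payload construction separate from the network call makes it easy to inspect or log the request before sending it with whichever HTTP client or SDK an app already uses.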

GPT-4o mini has a context window of 128K tokens for input and 16K tokens for output per request, with its knowledge going up to October 2023. It can also handle non-English text rather cost-effectively.
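For developers, those limits translate into a simple pre-flight check before each request. The sketch below assumes "128K" and "16K" mean 128,000 and 16,000 tokens and that token counts are already known (for example, from a tokenizer); the constant and function names are hypothetical.

```python
# Assumption: "128K" and "16K" from the article are read as round thousands.
MAX_INPUT_TOKENS = 128_000
MAX_OUTPUT_TOKENS = 16_000


def fits_limits(input_tokens: int, requested_output_tokens: int) -> bool:
    """Return True if both token counts are within the stated per-request limits."""
    return (
        0 <= input_tokens <= MAX_INPUT_TOKENS
        and 0 <= requested_output_tokens <= MAX_OUTPUT_TOKENS
    )


def clamp_output_tokens(requested_output_tokens: int) -> int:
    """Cap a requested output length at the stated output limit."""
    return max(0, min(requested_output_tokens, MAX_OUTPUT_TOKENS))


if __name__ == "__main__":
    print(fits_limits(100_000, 4_000))   # within both limits
    print(fits_limits(130_000, 4_000))   # input exceeds the limit
    print(clamp_output_tokens(20_000))   # capped at the output limit
```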
